AI can be a major productivity driver for organizations, helping with asset control, visibility, and risk evaluation in sprawling digital environments.

However, when it comes to exposure management, which takes a more holistic view than traditional risk management, no tool, especially an AI-powered one, should run unchecked without robust guardrails in place. Improperly controlled AI workloads can lead to data exposure and uncontrolled automation. Meanwhile, AI-assisted attackers are acting with increasing speed and potency, elevating the security of an organization’s digital property to a board-level issue.

There’s been much talk of agentic AI, meaning tools capable of making fully autonomous decisions. However, these tools are often given too much free rein in pursuing their goals, which can cause unintended consequences.

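AI workflows sit between the promise of fully autonomous AI agents and traditional automation, which can be overly rigid: they apply AI within a defined sequence of steps rather than letting it act entirely on its own.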

When configured and applied correctly, AI workflows can remove much of the complexity and cost from digital asset management. When done wrong, they introduce substantial risk. Striking this balance is half the battle.

AI Workflow Risks in Digital Asset Management

Some of the biggest risks that AI introduces into asset management workflows involve data exposure, access exploitation, and uncontrolled automation. Each has different implications for organizations and may surface differently. Because humans ultimately design these workflows, the root causes vary: a lack of oversight or awareness, human error, absence of training, or, in the worst case, malicious intent.

Data exposure

Third-party AI processes data on its provider's servers. Sending information to it inevitably pushes that information outside your realm of control, risking exposure of confidential data. Even if the provider claims to delete the information once it's processed, that may not be the case, or your often sensitive data may sit in their logged environment longer than you expect, leaving it open to exposure down the line.
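
One common mitigation is to redact obvious sensitive values before a prompt ever leaves your environment. The sketch below assumes a hypothetical `redact` helper with a handful of regex patterns; it is far from exhaustive, and production setups typically rely on dedicated data loss prevention (DLP) tooling instead.

```python
import re

# Hypothetical patterns for a few common sensitive values. Real coverage must be
# much broader (names, addresses, internal IDs, other secret formats).
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "AWS_KEY": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(text: str) -> tuple[str, dict[str, int]]:
    """Replace each match with a placeholder and count what was removed."""
    counts: dict[str, int] = {}
    for label, pattern in PATTERNS.items():
        text, n = pattern.subn(f"[{label}]", text)
        if n:
            counts[label] = n
    return text, counts


prompt = "Summarize the ticket from jane.doe@example.com, SSN 123-45-6789."
safe_prompt, removed = redact(prompt)
print(safe_prompt)  # Summarize the ticket from [EMAIL], SSN [US_SSN].
print(removed)      # {'EMAIL': 1, 'US_SSN': 1}
```

Only `safe_prompt` would be sent to the provider; the counts can be logged to show what was withheld.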

If data provided to third-party AI is used in model training, fragments of your data could show up in generated outputs for other customers, an AI security and privacy issue that has been present in LLM-powered chatbots since their popularity exploded.

Without proper controls over models and APIs, threat actors can exfiltrate data by manipulating the models or by exploiting overly broad access policies on storage buckets or pipelines. The implications go beyond data security: an organization handling protected information may face regulatory penalties, and any organization may suffer theft of its intellectual property.
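
As an illustration of the "overly broad access policies" risk, the sketch below scans an AWS-style S3 bucket policy for `Allow` statements open to any principal (`"*"`). It is a minimal example under stated assumptions: it ignores `Condition` blocks, `NotPrincipal`, and account-level Block Public Access settings, any of which can change the effective exposure, and the bucket and role names are hypothetical.

```python
import json


def wildcard_principals(policy_json: str) -> list[str]:
    """Return the Sid (or position) of Allow statements open to any principal.

    Simplification: Condition, NotPrincipal, and Block Public Access settings
    are ignored, so a flagged statement may be narrower in practice.
    """
    policy = json.loads(policy_json)
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement may be a bare object
        statements = [statements]
    flagged = []
    for i, stmt in enumerate(statements):
        if stmt.get("Effect") != "Allow":
            continue
        principal = stmt.get("Principal")
        if isinstance(principal, dict):
            # e.g. {"AWS": "*"} or {"AWS": ["arn:...", "*"]}
            values = []
            for v in principal.values():
                values.extend(v if isinstance(v, list) else [v])
        else:
            values = [principal]
        if "*" in values:
            flagged.append(stmt.get("Sid", f"Statement[{i}]"))
    return flagged


# Hypothetical policy for a training-data bucket.
policy = json.dumps({
    "Version": "2012-10-17",
    "Statement": [
        {"Sid": "PublicRead", "Effect": "Allow", "Principal": "*",
         "Action": "s3:GetObject", "Resource": "arn:aws:s3:::training-data/*"},
        {"Sid": "PipelineWrite", "Effect": "Allow",
         "Principal": {"AWS": "arn:aws:iam::111122223333:role/etl-pipeline"},
         "Action": "s3:PutObject", "Resource": "arn:aws:s3:::training-data/*"},
    ],
})
print(wildcard_principals(policy))  # ['PublicRead']
```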

Identity and access exploitation

AI workloads often run with broad cloud permissions that grant unrestricted access to GPUs, APIs, and databases. If a credential leaks, whether a service account key, an API key, or a security token, AI-enabled automated workflows become an easy stepping stone for lateral movement attacks. In especially egregious cases, the whole environment can be exposed, allowing attackers to pivot across all IT resources and cause costly damage to the business, and potentially to its customers and partners, too.
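
To make the "stepping stone" idea concrete, the sketch below treats access as a directed graph and runs a breadth-first search from one leaked credential to estimate its blast radius. The graph, node names, and `blast_radius` helper are hypothetical; a real inventory would be built from IAM, cloud, and SaaS data rather than hard-coded.

```python
from collections import deque

# Hypothetical access graph: an edge A -> B means "A can use or reach B".
ACCESS = {
    "leaked-api-key": ["svc-ai-pipeline"],
    "svc-ai-pipeline": ["gpu-cluster", "feature-db", "model-registry"],
    "feature-db": ["customer-pii-table"],
    "model-registry": ["deploy-role"],
    "deploy-role": ["prod-k8s"],
}


def blast_radius(start: str, graph: dict[str, list[str]]) -> set[str]:
    """Breadth-first search for every resource reachable from one credential."""
    seen, queue = {start}, deque([start])
    while queue:
        node = queue.popleft()
        for nxt in graph.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    seen.discard(start)  # report what the credential reaches, not itself
    return seen


print(sorted(blast_radius("leaked-api-key", ACCESS)))
# ['customer-pii-table', 'deploy-role', 'feature-db', 'gpu-cluster',
#  'model-registry', 'prod-k8s', 'svc-ai-pipeline']
```

A single over-privileged pipeline account turns one leaked key into access to PII and production, which is exactly the lateral path least-privilege permissions are meant to cut.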

Uncontrolled automation risks

AI is usually connected to automation pipelines. If these pipelines are not secured and are left to run unchecked, AI assistants and agents could trigger destructive changes that delete resources and expose data. Broad permissions and compromised access further exacerbate these risks, allowing attackers to manipulate AI into making such changes and inflicting further damage.
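
A common guardrail for AI-driven automation is a default-deny gate: low-impact actions run automatically, destructive ones wait for human approval, and anything unrecognized is refused. The sketch below assumes hypothetical action names, a `ProposedAction` record, and a `gate` function; it is not tied to any particular orchestration product.

```python
from dataclasses import dataclass

# Hypothetical policy: low-impact actions the automation may run on its own,
# and destructive ones that always need a human sign-off.
AUTO_APPROVED = {"add_tag", "open_ticket", "notify_owner"}
NEEDS_HUMAN = {"delete_resource", "modify_iam_policy", "make_bucket_public"}


@dataclass
class ProposedAction:
    name: str         # hypothetical action identifier
    target: str       # resource the action would touch
    proposed_by: str  # AI agent or workflow step that suggested it


def gate(action: ProposedAction, human_approved: bool = False) -> str:
    """Decide whether an AI-proposed action may run."""
    if action.name in AUTO_APPROVED:
        return "execute"
    if action.name in NEEDS_HUMAN:
        return "execute" if human_approved else "hold_for_review"
    return "reject"  # default-deny: anything the policy doesn't name is refused


print(gate(ProposedAction("delete_resource", "s3://old-logs", "remediation-agent")))
# hold_for_review
```

Keeping the allowlists outside the model means a manipulated prompt can change what the AI proposes, but not what the pipeline will actually execute.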

Shadow AI

Shadow IT, meet shadow AI. Workloads spun up in the cloud by teams without looping in security may use off-policy applications or unscreened AI providers, process sensitive data without safeguards, or be exposed to the internet without monitoring. Traditional security tools often miss these invisible risks, and these "unknown unknowns" present a substantial challenge for exposure management.
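
Shadow AI is often first spotted in network telemetry. The sketch below assumes you already have the outbound destinations of each workload (for example, from egress or DNS logs) and a list of known AI provider hostnames; every hostname and workload name is a placeholder. Because it only flags AI endpoints that are already known, it narrows the "unknown unknowns" rather than eliminating them.

```python
# Hypothetical inputs: outbound hostnames observed per workload (e.g. from
# egress or DNS logs) and AI provider hostnames. All names are placeholders.
KNOWN_AI_HOSTS = {
    "api.approved-llm.example",
    "api.other-llm.example",
    "inference.random-ai.example",
}
APPROVED_AI_HOSTS = {"api.approved-llm.example"}

observed = {
    "marketing-notebook": {"api.other-llm.example", "cdn.example"},
    "support-bot": {"api.approved-llm.example"},
    "data-science-vm": {"inference.random-ai.example", "api.approved-llm.example"},
}


def find_shadow_ai(
    observed: dict[str, set[str]], known: set[str], approved: set[str]
) -> dict[str, list[str]]:
    """Map each workload to the unapproved AI endpoints it contacts."""
    findings = {}
    for workload, hosts in observed.items():
        unapproved = (hosts & known) - approved
        if unapproved:
            findings[workload] = sorted(unapproved)
    return findings


print(find_shadow_ai(observed, KNOWN_AI_HOSTS, APPROVED_AI_HOSTS))
# {'marketing-notebook': ['api.other-llm.example'],
#  'data-science-vm': ['inference.random-ai.example']}
```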

Securing AI Workflows with JupiterOne

The risks of unchecked AI workflows can be controlled, and AI tools can be secured. The key is to govern access, audit model inputs and outputs, and revise and enforce AI usage policies. Doing so protects efficient AI workflows that deliver good outcomes.

Here is how JupiterOne can help organizations to secure their AI workflows and address AI's potential risks:

1. Governing access to AI tools

Organizations must control who and what can access AI tools, and under what conditions. This foundational rule of IT security applies to any tool, piece of software, or agent, but it is especially important for AI.

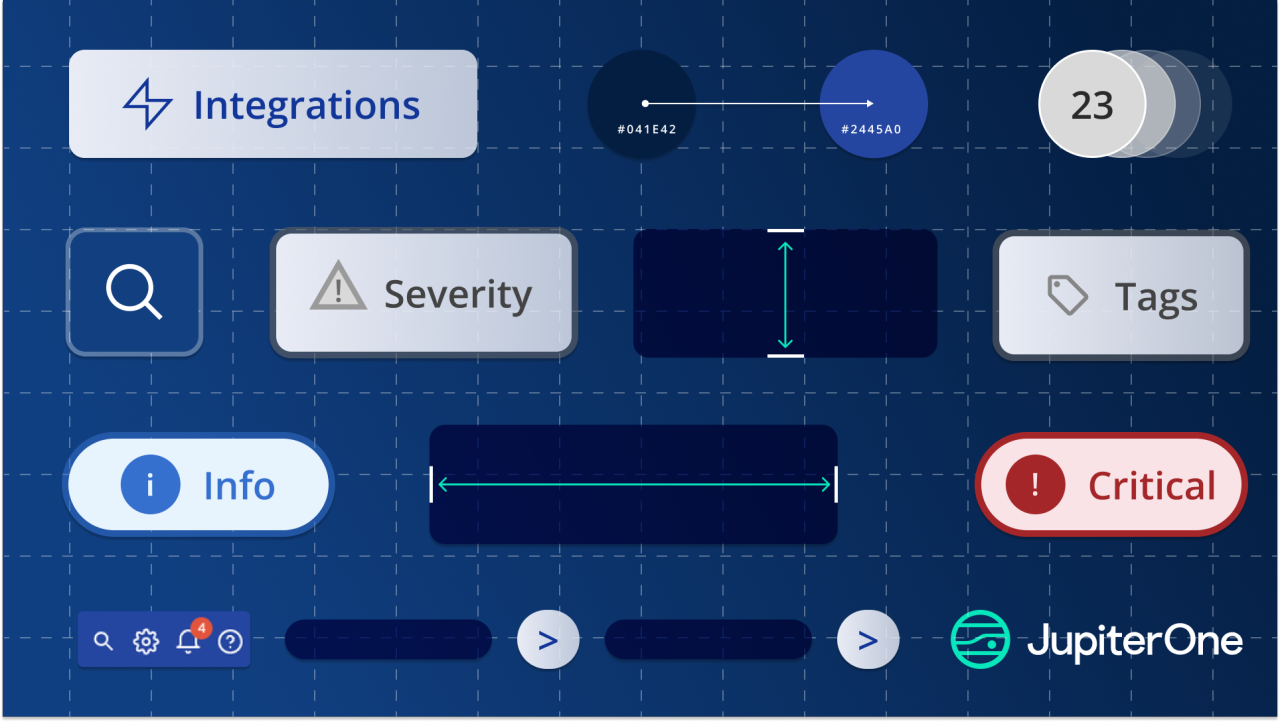

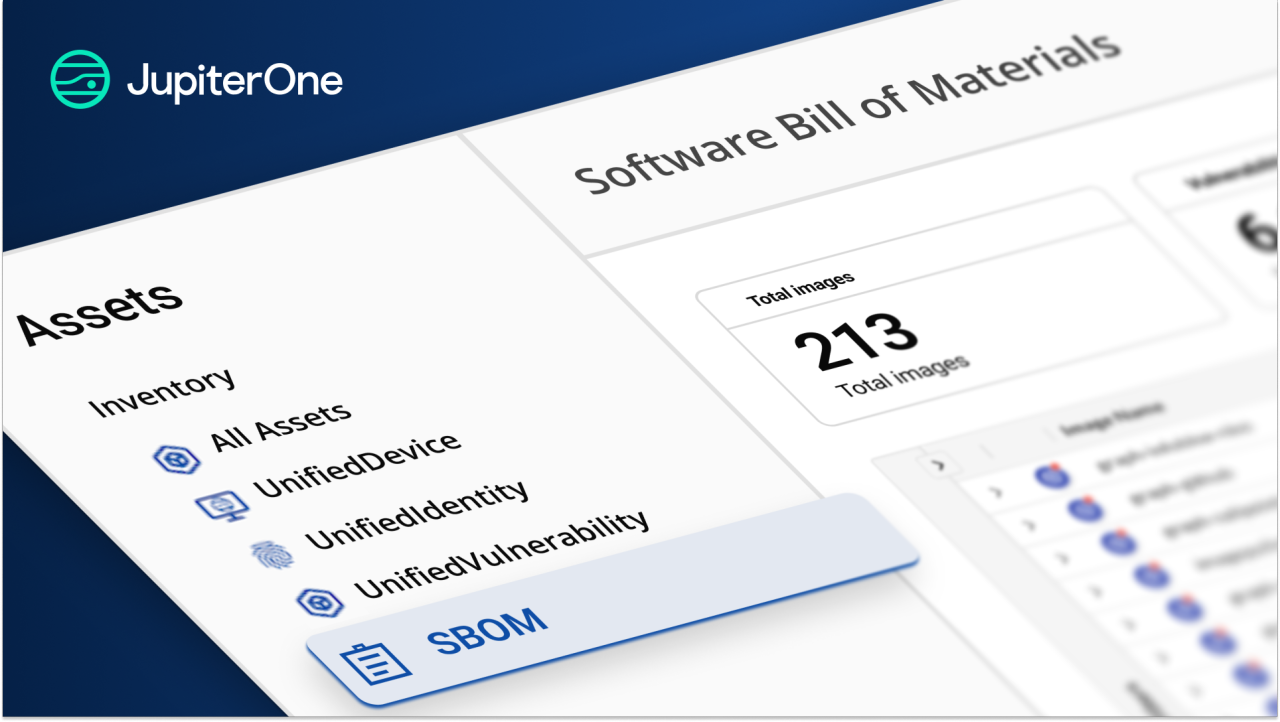

Enter JupiterOne: a powerful platform that provides full asset visibility, graph-based visualization, and asset governance across environments. By ingesting data from identity and access management (IAM) systems, cloud providers, SaaS applications, and every other asset in the environment, JupiterOne builds a graph of identities, roles, and entitlements.
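
The kind of question an identity graph answers can be sketched in a few lines: which identities hold AI-related entitlements through a role that is not approved for AI use? The records, role names, and entitlement strings below are hypothetical and do not reflect JupiterOne's data model or query language.

```python
# Hypothetical records, as they might arrive from an IdP, a cloud provider, and
# a SaaS admin API. Field names are illustrative, not JupiterOne's schema.
role_assignments = [
    ("alice", "ml-engineer"),
    ("bob", "data-analyst"),
    ("ci-bot", "ml-engineer"),
    ("carol", "contractor"),
]
role_entitlements = {
    "ml-engineer": {"llm-api:invoke", "training-bucket:write"},
    "data-analyst": {"bi-dashboard:read"},
    "contractor": {"llm-api:invoke"},
}
AI_ENTITLEMENTS = {"llm-api:invoke", "training-bucket:write"}
APPROVED_AI_ROLES = {"ml-engineer"}


def ai_access_review(
    assignments: list[tuple[str, str]],
    entitlements: dict[str, set[str]],
    ai_perms: set[str],
    approved_roles: set[str],
) -> list[tuple[str, str, list[str]]]:
    """List (identity, role, AI entitlements) granted via unapproved roles."""
    findings = []
    for identity, role in assignments:
        granted = entitlements.get(role, set()) & ai_perms
        if granted and role not in approved_roles:
            findings.append((identity, role, sorted(granted)))
    return findings


print(ai_access_review(role_assignments, role_entitlements,
                       AI_ENTITLEMENTS, APPROVED_AI_ROLES))
# [('carol', 'contractor', ['llm-api:invoke'])]
```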

2. Auditing model infrastructure

JupiterOne extends graph-based visualization, which is core to digital asset management, to AI workflows. Model workloads, their data sources, and their downstream consumers are all visualized as relationships between identities and systems, making them easier to analyze and adjust according to data handling policies.
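
As a minimal sketch of this kind of lineage analysis, the code below walks from sensitive data sources through the models that use them to their downstream consumers, flagging paths where confidential data could surface in a public-facing system. All names, sensitivity labels, and the fixed two-hop structure are illustrative assumptions, not JupiterOne's schema.

```python
# Hypothetical lineage: data sources feeding each model, systems consuming each
# model's output, and a sensitivity label per data source.
FEEDS = {  # data source -> models trained on or grounded in it
    "crm-export": ["support-summarizer"],
    "public-docs": ["support-summarizer", "docs-chatbot"],
}
CONSUMERS = {  # model -> downstream systems
    "support-summarizer": ["internal-helpdesk", "public-status-page"],
    "docs-chatbot": ["public-website"],
}
SENSITIVITY = {"crm-export": "confidential", "public-docs": "public"}
PUBLIC_FACING = {"public-status-page", "public-website"}


def risky_flows():
    """Yield (source, model, consumer) paths where confidential data may go public."""
    for source, models in FEEDS.items():
        if SENSITIVITY.get(source) != "confidential":
            continue
        for model in models:
            for consumer in CONSUMERS.get(model, []):
                if consumer in PUBLIC_FACING:
                    yield source, model, consumer


print(list(risky_flows()))
# [('crm-export', 'support-summarizer', 'public-status-page')]
```

Each yielded path is a concrete place to adjust: remove the source from the model's inputs, filter the model's output, or restrict the consumer.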

3. Enforcing policy across data and infrastructure

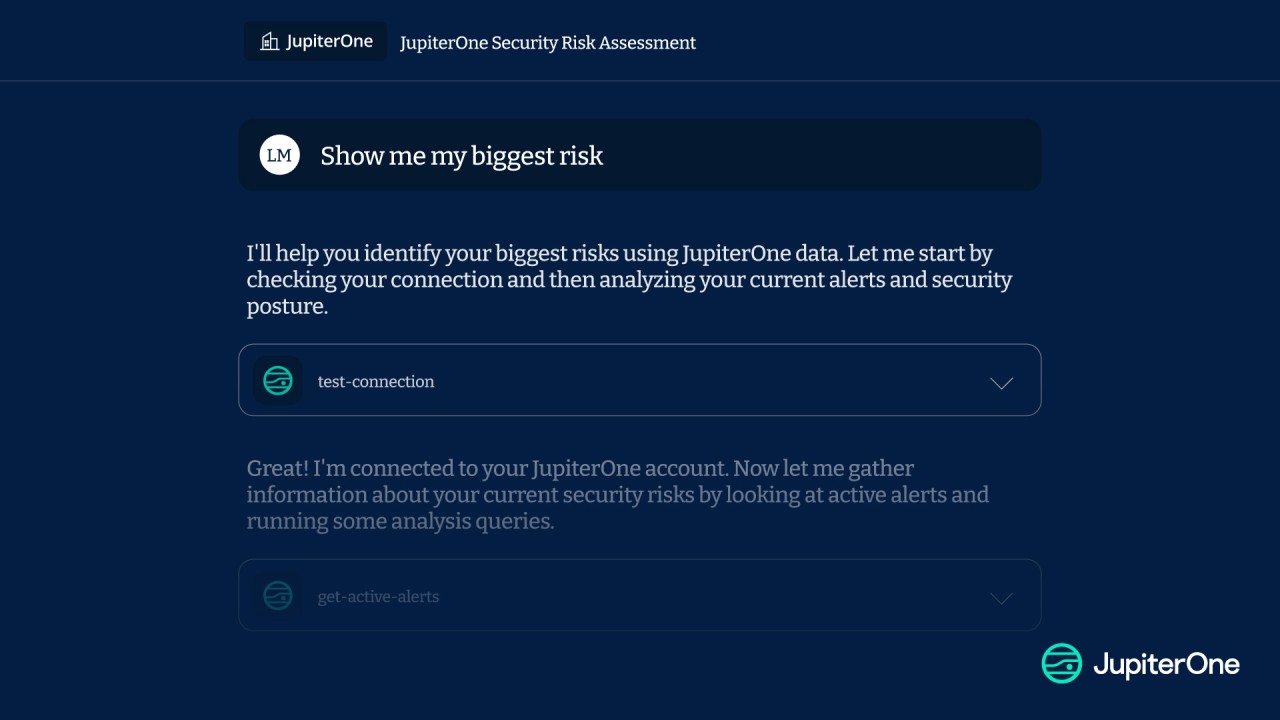

A digital asset management system worth its salt not only lets users map the full scope and context of their asset inventories but also gives them tools to define rules and guardrails for AI. In JupiterOne, teams can provide instructions through both code and the UI, including in natural language enabled by JupiterOne MCP (Model Context Protocol).

For example:

- "AI models can’t access production PII buckets directly."

- "No external API key should have write access to training datasets."

JupiterOne continuously evaluates the environment against these policies and established rules. When a rule is violated, it can alert SOC teams, automate remediation, and log evidence for audits in the team's preferred format. Its context awareness shows which AI models could misuse a configuration and which users and service accounts have access to specific functions, making it easier to identify security gaps and close them promptly.
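
The two example rules can be expressed as predicates over relationships in an asset graph. The sketch below is a simplified, hypothetical model (the node attributes, the `READS`/`WRITES` relation names, and the `evaluate` helper are assumptions) and does not use JupiterOne's API; it only illustrates how each violating relationship becomes a finding that could drive an alert, a remediation, or an audit log entry.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Edge:
    source: str
    relation: str  # hypothetical relation names, e.g. "READS", "WRITES"
    target: str


# Hypothetical node attributes and relationships pulled from an asset graph.
NODES = {
    "fraud-model": {"type": "ai_model"},
    "prod-pii-bucket": {"type": "bucket", "env": "production", "pii": True},
    "train-set-v3": {"type": "dataset", "purpose": "training"},
    "partner-key": {"type": "api_key", "external": True},
}
EDGES = [
    Edge("fraud-model", "READS", "prod-pii-bucket"),
    Edge("partner-key", "WRITES", "train-set-v3"),
]


def no_model_reads_prod_pii(e: Edge) -> bool:
    src, dst = NODES[e.source], NODES[e.target]
    return not (src["type"] == "ai_model" and e.relation == "READS"
                and dst.get("env") == "production" and dst.get("pii"))


def no_external_key_writes_training(e: Edge) -> bool:
    src, dst = NODES[e.source], NODES[e.target]
    return not (src["type"] == "api_key" and src.get("external")
                and e.relation == "WRITES" and dst.get("purpose") == "training")


POLICIES = {
    "AI models can't access production PII buckets directly": no_model_reads_prod_pii,
    "No external API key should have write access to training datasets":
        no_external_key_writes_training,
}


def evaluate(edges: list[Edge]) -> list[tuple[str, Edge]]:
    """Return (policy, edge) pairs for every relationship that breaks a rule."""
    return [(name, e) for e in edges for name, rule in POLICIES.items() if not rule(e)]


for policy, edge in evaluate(EDGES):
    print(f"VIOLATION: {policy}: {edge.source} {edge.relation} {edge.target}")
```

Running the evaluation on a schedule, or whenever the graph changes, is what turns these one-off checks into continuous enforcement.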

Conclusion

The AI hype can make it feel like AI can do it all, but it isn’t advanced enough to warrant such a degree of trust. There are secure, controlled ways to implement AI workflows that give AI just the right amount of freedom to unlock its benefits without exacerbating its risks.

AI has enormous potential for organizations, and JupiterOne elevates it by delivering AI workflows that are secure, compliant, and aligned with organizational guardrails. To turn opaque AI operations into visible, powerful, and governed digital asset management processes, check out the JupiterOne platform today.